Immersive Audio Series: Pt 1

Immersive audio has been in development for many decades, which is why it is so exciting that it has become accessible to consumers and not just audio professionals and hi-fi audiophiles. I get asked about spatial audio a lot, so I went back to some of my old grad school readings to help break down its foundations. These posts aren’t academic papers, just attempts at giving a basic understanding. I’ll start by covering the three foundations of spatial audio (Psychoacoustic, Physical Acoustic, Auditory Neurology) and then expand on how they relate to today’s immersive technology. Ref. 1

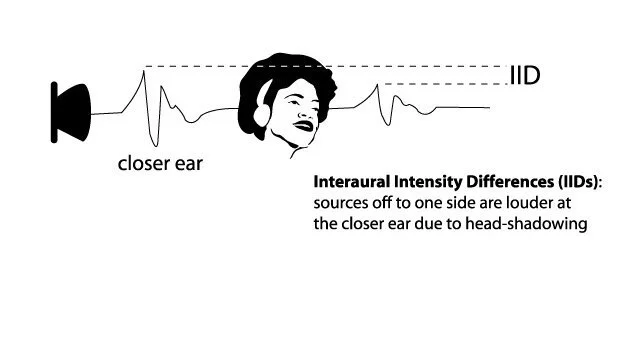

Today, I’ll just break down the psychoacoustic side. Ears are so unique to each individual that they can be used to identify people. Beyond that, a person’s two ears differ from each other, and they are separated and shadowed by the head. As a result, sound reaches each ear differently. These differences, called Interaural Differences, greatly affect spatial perception and are broken down further into Interaural Time Differences and Interaural Intensity Differences.

Interaural Time Differences are the differences in arrival time between the two ears. A sound coming from the right side of a listener will arrive at the right ear before it arrives at the left ear.

Ref. 2

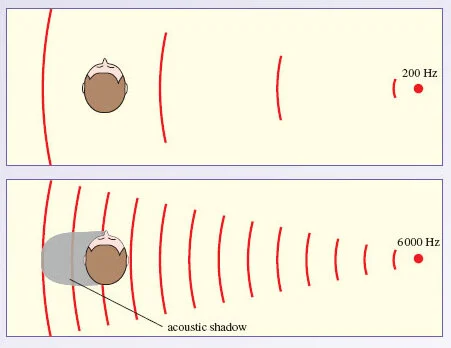
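To put rough numbers on that time difference, here is a minimal sketch. It uses Woodworth's spherical-head approximation, which is my addition and not something taken from the readings above. The head radius and speed of sound are assumed typical values, and the function name `itd_seconds` is just illustrative.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees C
HEAD_RADIUS_M = 0.0875      # assumption: a commonly quoted average head radius


def itd_seconds(azimuth_deg, head_radius_m=HEAD_RADIUS_M,
                speed_of_sound=SPEED_OF_SOUND_M_S):
    """Approximate ITD for a distant source around a rigid spherical head.

    azimuth_deg: 0 is straight ahead, 90 is directly to one side.
    Uses Woodworth's formula: ITD = (r / c) * (theta + sin(theta)).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("this approximation covers 0 to 90 degrees only")
    theta = math.radians(azimuth_deg)
    return (head_radius_m / speed_of_sound) * (theta + math.sin(theta))


if __name__ == "__main__":
    # Print the estimated delay for a few source directions.
    for az in (0, 30, 60, 90):
        print(f"{az:>2} deg -> {itd_seconds(az) * 1e6:.0f} microseconds")
```

Under these assumptions, a source directly to one side gives a delay of roughly 0.66 milliseconds, and a source straight ahead gives none. Real heads aren't spheres, so treat this as a ballpark.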

Interaural Intensity Differences relate to how the shape and shadow of the head affect the loudness and frequency distribution of sounds. A sound coming from directly to the listener’s left will be louder at the left ear than at the right ear. Furthermore, the head blocks higher frequencies because their wavelengths are short relative to the size of the head. The longer wavelengths of low frequencies are able to diffract around the head.

Ref. 3

Ref. 4
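The wavelength point is easy to check with a little arithmetic, since wavelength is the speed of sound divided by frequency. The sketch below compares a wavelength against an assumed head diameter. The 0.175 m diameter, the 343 m/s speed of sound, and the "shorter than the head" cutoff are all simplifying assumptions of mine; in reality the shadowing increases gradually with frequency.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees C
HEAD_DIAMETER_M = 0.175     # assumption: a typical adult head width


def wavelength_m(frequency_hz, speed_of_sound=SPEED_OF_SOUND_M_S):
    """Wavelength in metres for a given frequency in hertz."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_of_sound / frequency_hz


def is_shadowed_by_head(frequency_hz, head_diameter_m=HEAD_DIAMETER_M):
    """Rule of thumb only: True when the wavelength is shorter than the head."""
    return wavelength_m(frequency_hz) < head_diameter_m


if __name__ == "__main__":
    # Print the wavelength and the rule-of-thumb verdict for a few frequencies.
    for f in (100, 500, 2000, 8000):
        print(f"{f} Hz -> {wavelength_m(f):.3f} m, "
              f"shadowed: {is_shadowed_by_head(f)}")
```

With these numbers the crossover lands at roughly 2 kHz: below that, wavelengths are longer than the head and bend around it; above it, the far ear hears noticeably less.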

These interaural measurements are represented in Head-Related Transfer Functions (HRTFs). HRTFs superimpose spatial characteristics on audio. HRTF measurements take into account how the human body (head, shoulders, ear shape, ear canals) affects sound cues. Using spatial coordinates for distance, elevation, and azimuth, sound can be localized more accurately.
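In practice, superimposing an HRTF on audio usually means filtering (convolving) a mono signal with a pair of head-related impulse responses, which are the time-domain form of the HRTF, one for each ear. The sketch below shows only that mechanism. The `toy_hrir` helper is a hypothetical placeholder that produces a single delayed, attenuated impulse, not a measured HRTF, and the convolution is written in plain Python for readability (in a real project you would more likely reach for something like `numpy.convolve`).

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sequences of floats."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def toy_hrir(delay_samples, gain, length=8):
    """Placeholder impulse response: one delayed, scaled impulse.

    NOT a measured HRIR. It only mimics a time and level difference.
    """
    if not 0 <= delay_samples < length:
        raise ValueError("delay must fit inside the impulse response")
    ir = [0.0] * length
    ir[delay_samples] = gain
    return ir


def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono signal through a left/right impulse response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)


if __name__ == "__main__":
    # Pretend the source is on the left: the right ear gets it later and quieter.
    left, right = render_binaural(
        [1.0, 0.5], toy_hrir(0, 1.0), toy_hrir(3, 0.5)
    )
    print("left: ", left)
    print("right:", right)
```

A measured HRTF set would replace the toy impulse responses with real ones for each azimuth and elevation, which is where the ear shape and body cues come in.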

All of the above information contributes to binaural audio. Binaural audio is the method of recreating these spatial cues over headphones. It’s these HRTFs that make binaural audio possible. But as you might have picked up on, HRTFs are dependent on an individual’s head shape, ear shape, upper body build, etc. So what sounds good for one person might sound like absolute garbage to someone else. It’s very difficult to make a binaural experience feel realistic for all listeners. Here’s an example of HRTF differences (use headphones).

Why is this information important? Binaural audio is becoming more accessible to consumers, and as you start to experiment with it at home, think about how frequencies may or may not translate to a binaural mix. For example, higher frequencies tend to be perceived as coming from a source above the head, so putting lower-frequency sounds on that height plane might not sound accurate. This information might also help you choose which binaural plug-ins or software you prefer. Some might sound better or worse to you for a reason. That’s it for now!

Ref. 1 - Kendall, Gary S. A 3D Sound Primer: Directional Hearing and Stereo Reproduction. 1995.

Ref. 2 - Burk/Polansky/Repetto/Roberts/Rockmore. The Transformation of Sound by Computer: Localization/Spatialization.

Ref. 3 - McMullen, Kyla. Interface Design Implications for Recalling the Spatial Configuration of Virtual Auditory Environments. 2012.

Ref. 4 - https://www.open.edu/openlearn/science-maths-technology/biology/hearing/content-section-12.4. 2021